Q&A


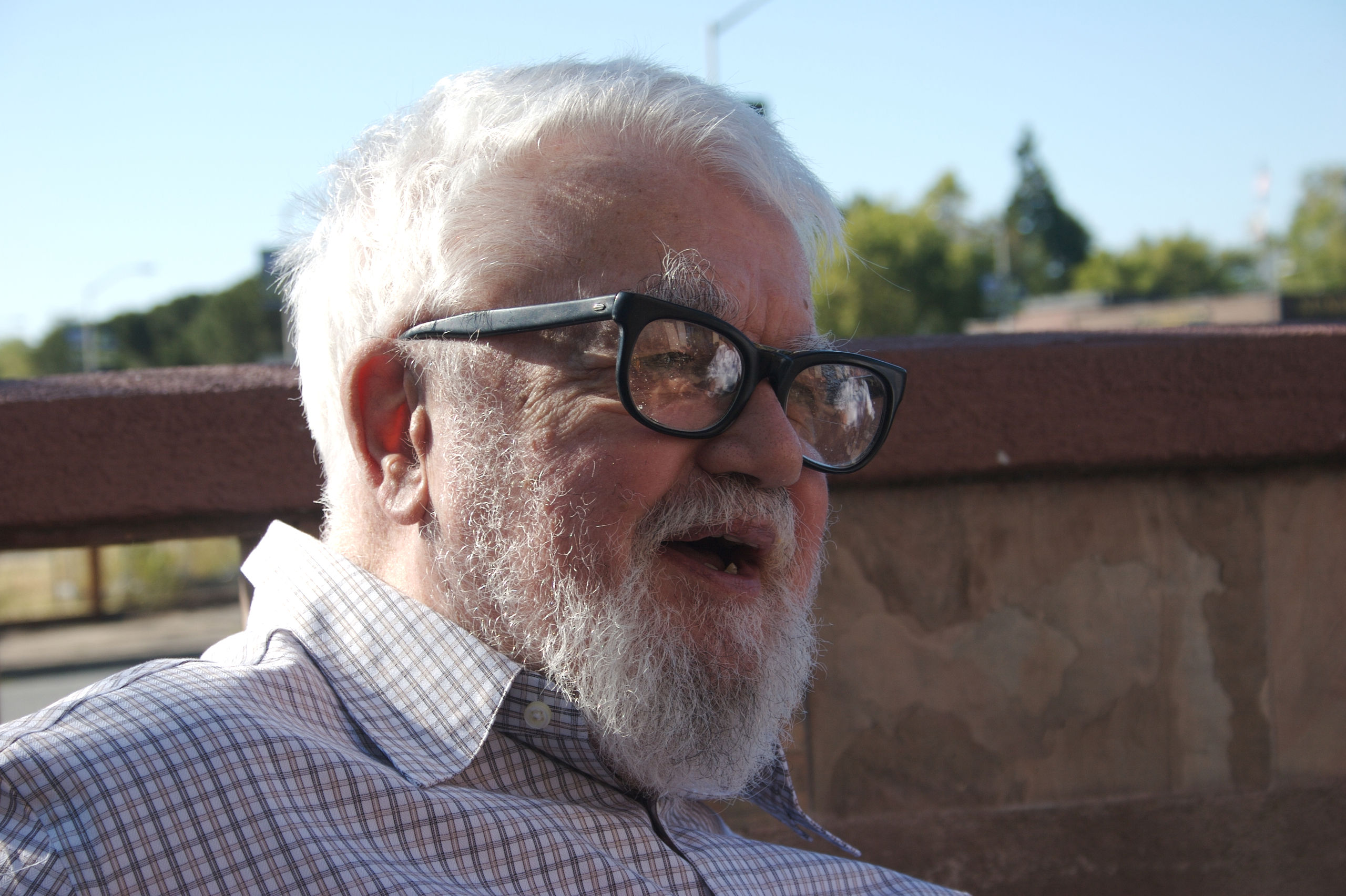

John McCarthy on Artificial Intelligence

by Philip J. Hilts

John McCarthy, the fifty-five-year-old director of Stanford University’s Artificial Intelligence Laboratory, is, in a sense, the father of all close relations between humans and computers. It was McCarthy who, while organizing the first conference on the subject at Dartmouth in the summer of 1956, invented the term “artificial intelligence” to describe the then-emerging field. McCarthy also has the distinction of having founded two of the world’s three great laboratories of artificial intelligence: the MIT laboratory, in 1957 with Marvin Minsky; and the Stanford laboratory, in 1963. (The third is part of Carnegie-Mellon University.)

While at MIT, McCarthy also invented a kind of computer time-sharing, called interactive computing, in which a central computer was connected to multiple terminals: the first practical one-to-one relation between a computer and its many users, each of whom could feel he had the machine all to himself. In 1958 he also created the computer language LISP (List Processing Language), the successor to the mathematical language of FORTRAN, in which most “intelligent” computer programs have been written. He founded a subbranch of mathematics called “the semantics of computation,” and solved its first significant problems, such as how to test certain classes of complicated computer programs to see if they were correct, and how to “crunch down,” or simplify, the number of steps involved in certain very complex computer operations. Over the past twenty-five years McCarthy has created a continuing succession of ideas that have been turned into computer hardware or programs.

Last summer, at the annual meeting of the National Conference on Artificial Intelligence, McCarthy and Minsky — the two founders of the field — confronted one another publicly, as they have privately over the years, on the real problem of artificial intelligence: how to give machines common sense. There now exist industrial robots with limited abilities, and so-called “expert systems” — computer programs that can mimic the analytical procedures a doctor performs in diagnosing disease, or that a geologist follows in deciding where to dig to strike a mineral. And an endless variety of “smart” devices, such as self-regulating thermostats and automobile rotor sensors, are now being developed that are based on the decision-trees that can be built into microchips. But all of this, says McCarthy, doesn’t address the real issue. These systems are useful and clever, but are no real match for human intelligence. What is simple for a computer is difficult for human beings: chess, mathematics, and expert knowledge. And what is simple for human beings is difficult for computers: common-sense thinking.

To illustrate the problem Minsky and McCarthy offer the statement, “Birds can fly.” It is clear that this statement is usually true — but not in all circumstances. The ostrich and the penguin can’t fly. Dead birds can’t fly. Birds held down by their feet can’t fly. These exceptions seem obvious to humans, but to a computer that has been given “Birds can fly” as a statement of fact, such exceptions can wreak havoc. The study of artificial intelligence has stalled at this juncture.

The problem, according to McCarthy — and it is at the heart of all questions of intelligence — is one of organization. A machine might be stuffed with the same billions of bits of information contained in a human brain. But recalling any one bit from memory — an operation that the brain can usually perform in milliseconds — cannot be done simply by sifting through a heap. If the job were done that way, it would take days for a human to move from the living room to the bathroom, because each bit of information — What is a bathroom? Is there one nearby? How can I transport my anxious self there? — would require a full search of the brain’s contents. So the challenge is to organize huge amounts of knowledge in a way that permits humans, or computers, to retrieve it at will — to mix and match pieces of knowledge and to establish permanent links between them so one will bring up the other.

McCarthy offers one approach to the problem, Minsky another. In Minsky’s scheme, information stored by the computer or brain is handled in “frames.” A “frame” is something like a context or dominant idea in an argument — a concept (“bird”) with many other related concepts or bits of information (“feather,” “flight,” “egg-laying”) attached to it in slots, or “subframes.” Each frame, of course, is connected: Calling up one may lead to calling up another, and so on. Thus, in Minsky’s frames, knowledge is linked in chains of association, but is always dominated by the frame.

McCarthy’s approach is to create a new form of logic, called nonmonotonic logic, that can tolerate ambiguity without losing the rigorousness of mathematical reasoning. In mathematical logic it is easy to make the statement, “A boat can cross a river.” In the real world this may be true, but boats may also leak or be missing oars. In logic these conditions might be accounted for simply by tacking them onto the first statement: “And there must be no leak and there must be oars.” But there are bound to be additional unanticipated disasters awaiting boaters. McCarthy’s solution is to say: “The boat may be used as a vehicle for crossing a body of water, unless something prevents it.” In ordinary mathematical logic this would not suffice, because every exception must be laid out item by item. But McCarthy’s approach provides a way of going ahead with incomplete information. If, for example, the computer hits the phrase, “unless something prevents it,” and finds nothing entered beneath that phrase — no leaks, no lost oars — it will continue on. If, on the other hand, it encounters “leak in the boat,” it will turn down a new path dealing with leaks, water, and repair. Using such a chain of interrelated logical statements it is not necessary — as it is in Minsky’s system — for one concept to dominate.
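The “unless something prevents it” idea can be illustrated with a very small sketch. This is only a toy under stated assumptions, not McCarthy’s actual formalism: the class `DefaultReasoner` and its methods `tell_prevents` and `can_do` are hypothetical names invented here. It shows the one property the passage describes, namely that a conclusion reached with incomplete information is withdrawn when a preventing fact is later entered.

```python
from collections import defaultdict


class DefaultReasoner:
    """Toy default reasoner: an action is assumed possible unless a
    preventing condition has been recorded for it. All names here are
    hypothetical; this is not McCarthy's actual formalism."""

    def __init__(self):
        # Maps an action to the conditions known to prevent it.
        self.preventers = defaultdict(list)

    def tell_prevents(self, condition, action):
        """Record a newly learned fact that blocks an action."""
        self.preventers[action].append(condition)

    def can_do(self, action):
        """Conclude by default that the action works if nothing is listed."""
        return len(self.preventers[action]) == 0


kb = DefaultReasoner()
action = "cross the river by boat"

before = kb.can_do(action)            # nothing entered, so go ahead
kb.tell_prevents("leak in the boat", action)
after = kb.can_do(action)             # new fact withdraws the conclusion

print(before, after)  # True False
```

In ordinary (monotonic) logic, adding a fact can never remove a conclusion; here the second call to `can_do` gives a different answer from the first, which is the nonmonotonic behavior in miniature.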

McCarthy admits that this is probably not how the human brain works, “but this is AI, so we don’t care if it is psychologically real.” Minsky’s frames come no closer to modeling the power and flexibility of human thought. And neither method has proved practical so far in building intelligent programs. But then, neither man believes that machines will achieve anything approaching human intelligence any time soon.

Meanwhile, McCarthy allows his thoughts to roam freely. A rather shy man, he possesses an extraordinary ability to concentrate his mind on a single idea — to step wholly into it. “A large part of his creativity,” says one colleague, “comes from his ability to focus on one thing. The hazard of that is, everything else gets screwed up.”

Born to an Irish Catholic father and a Lithuanian Jewish mother, McCarthy was raised, along with his younger brother Patrick, by parents who abandoned their religions to embrace atheism and Marxism. Thrown out of Cal Tech for refusing to attend physical-education classes, McCarthy was among the last Americans drafted into World War II. After the war he returned to Cal Tech to earn his bachelor’s degree, then went on to earn his Ph.D. at Princeton. In 1956 he was offered his first teaching position, in the mathematics department at Dartmouth. In 1957 he moved to MIT, and in 1963 accepted an offer to head his own department at Stanford.

A widower and father of two daughters (his second wife Vera died in a climbing accident during the 1978 all-women ascent of Annapurna), McCarthy is a bit awkward socially, sometimes ignoring the usual conventions. But though he may appear absentminded and distant, his interior life seems to be one continuous stream of ideas, not only in the fields of mathematics and computing, but also in politics, literature, music, or plumbing. An avid reader of science fiction, he is also the author of a number of stories that, thus far, remain unpublished. When his home thermostat malfunctioned some years ago, causing the temperature in some rooms of his house to climb above 80 degrees F, McCarthy was inspired to write an unpublished philosophical treatise (available to anyone who cares to call it up on Stanford’s computerized “memo system”) on the subject of whether it is proper to say that thermostats “believe” and can have “mistaken beliefs.” He once had to struggle with moving a piano up a flight of stairs. Soon afterward he was deep into the problem of transporting heavy objects over difficult and uneven terrain. His answer: a cleverly designed, six-legged mechanism that could carry a piano up and down stairs.

In this interview, conducted at McCarthy’s Stanford home and in a local restaurant by Philip J. Hilts, national staff writer for The Washington Post and author of Scientific Temperaments: Three Lives in Contemporary Science, McCarthy leaps from mundane questions to fantastical proposals, calculations, and other oddities. This is John McCarthy. His mind does not remain on factual, earthly matters for more than a few seconds before it is again taking off into another fanciful possibility — some of which he has thought about a great deal. What follows may not be the conventional interview, but then, McCarthy is not the conventional scientist.

- OMNI

I want to ask you first of all about the robotic arms and eyes coming into use in industry. So far the main application of these things is in the assembly line.

- McCarthy

Yes, so far this is the main application of robotics. One of the things that has happened recently is a great proliferation of little devices of different costs; you can buy something for as little as 1,500 dollars that can move its hands around and pick up things — a sort of toy for hobbyists. It’s controlled by a microprocessor. I’m not sure what its limitations are. It’s not very strong, however, and it may not be very reliable. If you tried to run it all day it might break down. Like almost all robotics in use today, it does not have a mind. Because of this, what we have is very limited.

- OMNI

But there has been some progress toward making mechanical arms and robots smarter?

- McCarthy

There are two directions in which things are advancing: force-sensing for the mechanical hands, and specialized systems of vision. The original robots had no mechanism for sensing how much force their hands were exerting; so the commands were simply commands for specific motions. Now that meant most things did not quite fit. There had to be sufficient give in the object being picked up to make up for the limitations of the robot arm. More recently the arms have been fitted with force sensors so that the servoing, or servo-mechanism — the program that controls the arm — actually measures force. In other words, suppose you want the robot to put a slightly tapered peg in a hole. The robot hand would move the peg downward and begin to sense a force on one side of the peg, which would tell the machine it hasn’t got the peg quite centered; so the hand would move the peg over a little bit.
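The peg-in-hole correction described above amounts to a feedback loop: sense a sideways force, nudge the peg, repeat. The sketch below is a one-dimensional toy, not a real robot controller. The function name `center_peg`, the `gain` and `tolerance` parameters, and the assumption that the sensed force is simply proportional to the offset from the hole’s center are all made up for illustration.

```python
def center_peg(peg_x, hole_x, gain=0.5, tolerance=0.01, max_steps=100):
    """Nudge a peg sideways until the sensed side force is negligible.

    Toy model (an assumption, not real sensor physics): the side force is
    signed and proportional to how far the peg is from the hole's center.
    Returns the final peg position and the number of corrections made.
    """
    for step in range(max_steps):
        side_force = hole_x - peg_x          # simulated force-sensor reading
        if abs(side_force) < tolerance:      # centered well enough: stop
            return peg_x, step
        peg_x += gain * side_force           # move the peg over a little bit
    return peg_x, max_steps


final_x, corrections = center_peg(peg_x=1.0, hole_x=0.0)
print(final_x, corrections)
```

A robot without force sensing corresponds to skipping the loop entirely and trusting the commanded position, which is why the earlier arms needed “give” in the parts.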

- OMNI

And in the area of vision?

- McCarthy

There are several things being done. One is to seek out special cases in which you don’t have to solve the whole vision problem — in which the robot doesn’t have to see or have full recognition of three-dimensional objects as a human does. There are many problems that can be solved by using plane views; so you have only a two-dimensional problem to worry about, rather than a three-dimensional one.

- OMNI

Give me an example.

- McCarthy

Suppose you have flat parts moving on a belt. A part may be in one position or it may be rotated, but the robot can use a template to identify it and its orientation. You can have a fixed camera up above, looking straight down, and the program can rotate a template until it matches the shape of the object in its current orientation.
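The rotate-a-template idea can be sketched on a tiny black-and-white grid. This is a simplified illustration under loud assumptions: the part is a grid of 0s and 1s, only quarter-turn rotations are tried, and the match must be exact. The names `rotate90` and `match_orientation` are hypothetical; a practical system would handle arbitrary angles, noise, and position offsets.

```python
def rotate90(grid):
    """Rotate a grid of 0/1 rows a quarter turn clockwise."""
    return [list(row) for row in zip(*grid[::-1])]


def match_orientation(template, image):
    """Return the clockwise rotation (in degrees) at which the template
    matches the camera image exactly, or None if no quarter turn matches."""
    candidate = [list(row) for row in template]
    target = [list(row) for row in image]
    for quarter_turns in range(4):
        if candidate == target:
            return quarter_turns * 90
        candidate = rotate90(candidate)
    return None


# An L-shaped flat part as seen from a camera looking straight down.
template = [[1, 0],
            [1, 0],
            [1, 1]]

# The same part arriving on the belt turned upside down.
seen = rotate90(rotate90(template))

angle = match_orientation(template, seen)
print(angle)  # 180
```

Because the camera looks straight down at flat parts, the whole problem stays two-dimensional, which is the simplification the answer describes.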

Another specialized vision problem is flight simulation. It’s fairly complicated to represent the moving landscape ahead of, and under, an airplane, chiefly because parts of objects are always hidden from any particular view of a scene — the far side of hills and so on. Well, there are now programs for simulating the continually changing views and conditions of flight.

- OMNI

How much specialized programming has found its way into industry?

- McCarthy

These things aren’t in industry, except for the computer simulation being used in movies and so on. In general I think people are far less ambitious about getting vision and manipulation into industry than they were, say, fifteen years ago.

- OMNI

Really? Why?

- McCarthy

Well, I’m not sure. Mainly, I think, because the problems are very hard and as people have begun to work on the very hard problems of vision and manipulation, they’ve identified easier subproblems. In the beginning people said, “I want my robot to do what a human does.” But part of the progress has consisted of identifying easier subproblems, the solutions to which are nonetheless useful in themselves. Specific factory-automation devices are one set of easier things that don’t go a long way toward solving the real problem of how vision works. Another system that has been worked on here at Stanford is one that can look at an aerial photograph of San Francisco Airport and pick out the airplanes — distinguish them from buildings and vehicles, and see airplanes that are partly obscured or hidden.

- OMNI

This is from an aerial photograph? It could pick out certain objects, such as missiles on the ground?

- McCarthy

Yes, it could. The Defense Department is paying for its development. But it’s also a good scientific problem — being able to take a whole scene and find all the similar objects in it.

- OMNI

What’s the chief problem to overcome here?

- McCarthy

I don’t know. It always seems to me we ought to make faster progress in robotics than we do. When I first started on robotics in 1965 or so, we stated in our first proposal that we would get a robot to assemble a Heathkit [a build-it-yourself electronics kit]. It’s still not entirely clear to me why that proved impossible.

- OMNI

You actually got a kit and tried it?

- McCarthy

No. The robot arms were never flexible enough to do the mechanical motions, nor did we have programs to control them. The old-fashioned Heathkits involved threading, bending, cutting, and soldering wires. It required considerable dexterity and sophistication to know where and how much force to apply; I don’t think we were even close to it.

- OMNI

Are we getting closer now?

- McCarthy

No. I think everyone’s working on the easier problems. In my view, what everyone wants eventually is not only a robot that will take its place in the assembly line, but a “universal manufacturing machine.” This would be more like one robot that could make a whole TV set, a whole camera, or a whole car. The robot might have several arms and a collection of tools. But it would be interesting if you could sell the thing, if you could go to your neighborhood assembly shop and say, “Well, I’d like that TV from the catalog, but with this additional feature.” The TV would be made by one machine. So you could retain the low cost of mass production, but still get individuality and custom design, as if it were handmade. What I would like with these automated means is to extend the power of an individual, so that one man could build a house or car for himself.

- OMNI

How would you do that?

- McCarthy

Well, rent a gang of robots, as it were. As it is now, whenever you see a construction site, none of the cranes and bulldozers bear the name of the company that’s doing the construction. They bear the name of the company from which the equipment is rented. So first you’d design the house or car, and the design would go through a lot of computer testing; you’d simulate the construction before you began and the computer would tell you exactly what equipment to rent. You’d rent the robot equipment and it would build the house or car.

Let’s not imagine this is something the average person would do. The Rockefeller Foundation had a slogan around 1910, largely forgotten now, which was “Make the peaks higher.” It meant take the best existing research institutions and make them still better — the direct opposite of equality. And from the point of view of increasing what a particular individual could do versus what everybody could do, one would also like to make the peaks higher. And what can be done by one person, or a small group of people, has increased as technology has advanced. I believe robotics can advance that a lot more.

- OMNI

I hear many inventors complaining that they have no way of approaching corporations — that they’d like to do something but they can’t. I guess being able to rent robots would help.

- McCarthy

Yes, right. But of course, half of the inventors are crackpots. As for the other half — even the guys who aren’t crackpots — 90 percent of their inventions aren’t going to make it.

I had an experience trying to market an idea. In fact, I’m still convinced the idea is practical.

- OMNI

Can you tell me what it is?

- McCarthy

I suppose so. Since I can’t make money out of it I’ve recently been trying to give it away. It’s a computer mail terminal. You could buy this thing from a department store, and then you could type on it: MAIL THIS MESSAGE TO SO-AND-SO. It would be connected to the telephone system, and one computer would call up another terminal and deliver the message. It seems to me that inventing the thing itself was easy enough, but to persuade some company to make it was harder. My partner and I had a lot of contacts and interviews with prominent companies; so we didn’t have any problems getting attention. Nevertheless, not one of them decided to produce the terminal.

- OMNI

Would it be cheaper than a home computer?

- McCarthy

It would be more expensive than some home computers and less expensive than others. Something like the Sinclair couldn’t do it. It wouldn’t be enough computer. It doesn’t have enough storage to store messages or enough display to permit you to conveniently compose messages and so forth. So it would be a specialized home computer.

Now, programs for that purpose and the necessary equipment to attach to the phone system are probably being developed for home computers. But no universal convention exists that would permit any home computer anywhere in the world to send messages to any other home computer anywhere in the world. I think there’s a good chance IBM will develop one. At least, I tried to give them the idea.

- OMNI

What do you think will ultimately be made possible through robotics, and what forms will robots eventually take?

- McCarthy

It seems to me that what can be done and what will be done don’t exactly coincide. There’s an enormous variety of things that can be done. The extreme example would be machines built along the lines of the science-fiction-story robots.

- OMNI

Humanlike things that walk around?

- McCarthy

Yeah.

- OMNI

Is that practical? I mean, is there any use for something like that?

- McCarthy

In some sense science fiction’s portrayal of robots involves a kind of sociological imagination. During the Twenties and Thirties robots were depicted in films and stories as an enemy tribe that attempted to conquer the world, and our hero wiped them out. By the Fifties robots had become an oppressed minority and our hero sympathized with them. But those ideas had little to do with human needs. They had to do with literary needs. Now, Isaac Asimov, who is the most popular writer to write about robots, has formulated these laws of robotics in which he almost intentionally confuses natural laws — laws of motion — with legislated laws. He implies that his legislated laws — that a robot should not harm a human being, for example — are in some sense natural laws of robotics. And then he writes these almost Talmudic stories in which the robots argue about whether something is or is not permissible according to the law. Well, that, of course, is also literary.

Now, what shall we want? One thing that seems reasonably clear to me is that making robots of human size and shape is the least likely. Rather more practical would be a robot that is much smaller or much bigger than a human and could do things humans cannot do because of their size or shape. It would seem to me the first winners would be robots quite different from a human. There is, however, one advantage to robots of human shape and size: They could use facilities that were designed for human beings.

One of my ideas along these lines that is ultimately possible — and I’ve been thinking about it for many years — is the automatic-delivery system. I’d like to be able to turn to my computer terminal, type into it that I want a half gallon of milk or a new gadget and, twenty minutes later, the milk or gadget would appear automatically.

- OMNI

By what system?

- McCarthy

The first system one thinks of as a child is, of course, little trains that run along in tunnels under the streets and so forth. What’s wrong with that idea as it stands? Well, the little trains are expensive and not very fast. They can’t carry very big objects, and they require an expensive redesign of the whole city. My current scheme is as follows: There’s a nineteenth-century version and a twentieth-century version, or eco-version. The nineteenth-century version involves cables strung on poles, like the cables at ski resorts. The carriers are two-armed robots, except that they’ve got one arm like a gibbon and they hang on to the cable with one arm. They can switch cables by grabbing another one.

- OMNI

And these things somehow carry the objects being moved, and then swing like monkeys across these cables?

- McCarthy

Right. Now, the other thing that they can do is climb the outside of a building on handholds that have been built into the building. They deliver things to a box, maybe the size and shape of an air conditioner, which is built into an outside wall. And after a while you hear these clanking noises, and what you’ve ordered appears in this box.

Now, in the eco-version, which is much more expensive, these things are in tunnels under the streets, so you don’t have them clanking around overhead. But the idea that they would either come down from their poles or come out from underground and climb outside the building strikes me as essential in order to make them compatible with present buildings.

- OMNI

We could have a little tube running up the side of the building.

- McCarthy

Yes, but remember, not everybody would subscribe to the service at first. Not many of us are of the generation that remembers the installation of electricity. Just consider what an enormous amount of work that was. You look at old buildings and say, “How did they ever install electricity in that house?” They had to tear up bits of the walls to run the wires through. The other possibility, or the other extreme possibility, is a walking robot that, after it comes down from the cables or up from underground, simply walks over and knocks on your door. In some sense that would be more flexible. Something could be delivered to someone who wasn’t a subscriber.

What I envision, actually, with regard to robots, are some fairly large social changes that would bring about a return to the Victorian Age, in a certain respect. If you had this robot to work twenty-four hours a day, you would think of more and more things for it to do. This would bring about an elaboration in standards of decoration, style, and service. For example, what you would regard as an acceptably set dinner table would correspond to the standards of the fanciest restaurant, or to the old-fashioned nineteenth-century standards of somebody who was very rich. Standards would conform to what we imagined to be those of the British aristocracy, because they had servants. People ask, “Well, what will happen when we have robots?” And there is a very good example of historical parallel. Namely, what did the rich do when they had lots of servants?

- OMNI

How many years must we wait?

- McCarthy

I don’t know. It’s not a development question. It requires some fundamental conceptual advances on the order of the discovery of DNA’s structure. Maybe once these advances are made, progress will be straightforward.

- OMNI

Would robot intelligence and human intelligence be alike? Humans are motivated by anger, jealousy, ambition, sensitivity. And in literature robots are portrayed as possessing these same motivations.

- McCarthy

I don’t think it would be to our advantage to make robots whose moods are affected by their chemical state. In fact, it would be a greater chore to simulate the chemical state. And it would probably also be a mistake to make robots in which subgoals would interfere with the main goals. For example, according to Freudian theory we develop our ideas of morality in order to please our parents. But then eventually we will pursue these concepts even in opposition to our parents. The general human instinct to assert independence is something that would require some effort to build into robots. It doesn’t seem to our advantage to make that effort.

- OMNI

What about the possible disruption — the unemployment that could be caused by robots?

- McCarthy

Well, there are two questions that have to be answered. One has to do with superrobots. In other words, what will happen when we have robots that are as intelligent as people, which is a long way off. The other has to do with simple automation, which is similar to the advances in productivity that have already occurred.

The United States and other countries have gone through various economic cycles of unemployment and full employment. These countries have also gone through various periods of rapid or slow technological development. No one has ever attempted to correlate these things. But I think what would be observed is that there is no correlation: periods of high unemployment are not especially correlated with periods of rapid technological advance. In fact, on the average, more advanced countries have somewhat lower unemployment than do the less technologically advanced countries. We have unemployment, but Mexico has vastly higher unemployment.

To take the extreme example, the average productivity of a worker in the United States has increased five times since, I don’t know, 1920 or something like that. So you would expect that four-fifths of the population would be out of work.

- OMNI

But obviously when automation comes in, people are out of work temporarily, and then go to something else.

- McCarthy

That’s right. Now there is an economic malfunction that causes unemployment — that causes this interaction between unemployment and inflation and so forth. But it seems to me that this malfunction has little, if anything, to do with technology. What seems clear is that nobody knows how to deal with unemployment.

- OMNI

That’s taking automation only up to a certain level. But if we go up to the next level and have smart robots, I would imagine there would be a fairly major shift in people’s ways of life.

- McCarthy

There was a soap opera of the Thirties in which a girl from the hills of Kentucky married an English lord. The question was, “Can a young girl from poverty-stricken Kentucky adjust to life among the English aristocracy, with dozens of servants and so forth?” And the answer was that one has a real hard time adjusting — sometimes it takes all of ten minutes. So it seems to me that what we would have to adjust to is being rich. It could take all of ten minutes.

- OMNI

What about the psychological benefits of being rich? If everyone had chauffeurs, maids, and servants . . .

- McCarthy

I don’t think that’s really important. If you read nineteenth-century literature, you don’t find any indication of people taking pleasure in their position relative to their servants. As far as they were concerned, servants were part of the machinery. It doesn’t seem to me that you will lose a very large part of the psychological benefits of being rich merely because other people are rich. As the saying goes, “Anybody who is anybody . . .”

- OMNI

That raises another question: How do we deal with machines? People who work with home computers have a funny way of talking about them. “It likes” and that sort of thing.

- McCarthy

In my view, verbs like believes, knows, or doesn’t know, can do, can’t do, understands, or didn’t understand are appropriately used with many present computer programs. And such language will become increasingly appropriate.

- OMNI

There’s a certain amount of humor involved when people use personal terms to refer to machines.

- McCarthy

Yes, there’s also a lot of purely metaphoric use of these phrases, even in relation to old machinery, which is not really appropriate. That is pure projection. Of course, with regard to computers, that projection takes place. But there’s also the appropriate use, and eventually the pure projection and the appropriate use will be inextricably entwined.

- OMNI

Do you think as we move toward more automation that we are going through a period of Luddism — revolt against the coming of robots? It seemed as if we were doing that for a while in the Sixties. But that seems to have subsided now.

- McCarthy

It seems to me that the cause of those incidents had nothing to do with computers. It was a social phenomenon of some kind that we don’t clearly understand. If computers were the cause, the cause hasn’t gone away. The impact of computers on daily life was much more profound during the Seventies than it was during the Sixties.

- OMNI

What about the predictions of millions of people suddenly being put out of work by automation?

- McCarthy

That’s by no means a prediction. That’s merely a speculation as to what could cause Luddism on a substantial scale, and I don’t think people would be quite so dumb as to do it. To say something else, it seems to me that if we are all to be rich there has to be a lot more progress in automating office work. More than half the U.S. population now works in services of one kind or another, and we won’t be rich unless we succeed in automating those.

Let me repeat a story someone told me about his vision of the future. A clerk in Company A hears a beep. She turns to her terminal and reads on the screen WE NEED 5,000 PENCILS. ORDER THEM FROM COMPANY B. So she turns from her terminal to her typewriter, types out a purchase order, and sends it to Company B, where another clerk reads the order, turns to her terminal, and types in SEND 5,000 PENCILS TO COMPANY A. The person who related the story told it, as far as I could tell, with a totally straight face. But what do we need those two clerks for? Why don’t those two computers talk to each other? Interorganizational communication by computers is something that’s hardly started.

- OMNI

We’re getting to the point where we have terminals that do communicate with each other. Of course, they don’t communicate much.

- McCarthy

My main complaint about technology has been the slowness with which it is developing. My impression is that the rate of technological innovation, so far as it affects daily life, has been slower, say, between 1940 and 1980 than it was between 1880 and 1920. So people who complain about technological change going faster and faster are simply wrong. A lot of the complaints are in a sense complaints that technology is advancing too slowly, that the individual doesn’t see nearly enough improvement in his own lifetime.

Some important improvements are not appreciated. You don’t spend five minutes a day thanking technological improvements in sanitation and housing for the fact that you and your children don’t have TB. The normal attitude is to take health for granted until you don’t have it anymore, and then you complain. The same is probably true of wealth, insofar as technology has really contributed to your getting a higher salary than you would earn otherwise. But you don’t see the contribution that some specific invention has made to your increased salary.

It’s interesting to look at what inventions could have been introduced thirty or fifty years before they actually were — the missed opportunities where the technology was available to build them. And there are a fair number of them.

- OMNI

Name one.

- McCarthy

Well, I have a white-disc push-button combination lock on my front door. I can open it much faster than I could a key lock, especially in the dark. Mechanically it’s no more complicated than a key lock. It could have been invented one hundred years ago.

Another is the pulse jet engine. Are you familiar with it? Its only application was the German V-1 rocket during World War II. It is a very simple engine. Gasoline is squirted in and the jet explodes out the back end. The momentum as it goes up creates a vacuum that sucks air in the front, so that the thing goes “phutt-phutt-phutt-phutt.” There is nothing in the technology of that engine that would have prevented its being built in 1890, and it’s vastly simpler than a piston engine.

- OMNI

You and Marvin Minsky propose different solutions to the question of artificial intelligence and common sense. Can you give me a brief description of the two different points of view?

- McCarthy

Minsky is skeptical — one could say more than skeptical — about the use of logic in artificial intelligence. But I and some others are optimistic about the use of logic to express what a computer can know about the world. What’s clear is that some modifications are required, and I expect to make progress using various forms of formalized, nonmonotonic reasoning. And Minsky is skeptical about whether that will work.

But actually that’s not quite the whole story, because in addition to his skepticism about what won’t work, Minsky has positive ideas about what will work.

- OMNI

Can you describe his ideas in simple terms?

- McCarthy

Maybe he can! I can’t. I can mention an idea of his that I’m skeptical about. This is the notion that in any particular situation, there is a dominant “frame.” Minsky and Roger Schank, of Yale University, have pursued this idea. The restaurant frame, for example.

- OMNI

Meaning that when you walk into a restaurant, you enter a context in which you speak, act, and understand things in a certain way that would make no sense if you were, say, in a skating rink?

- McCarthy

Right. Put that way it’s almost a truism. But the notion of a single dominant frame with subframes and so forth can be contrasted with the notion that information from a variety of sources interacts to define the situation. In other words, is the situation always dominant, or is it dominated by a frame?

Here you are, interviewing me. That is a frame. One could put some slots into that. But if we actually tried following the details of the conversation, would the frame concept allow for that? It works fairly well at the top level. You have a collection of questions that you want to discuss; so at that level it works quite nicely. This interview with me is, in that respect, very similar to the interview we did for your book [Scientific Temperaments]. Or from my point of view, being interviewed by you is similar to being interviewed by someone else. But if you’re not bored by this particular interview, that must be because it is, in some important way, different from the others. And that isn’t quite caught by the frame.

Now Schank, who writes a lot of computer programs, seems to be finding that in order to make things work he needs “packets” of information that interact with one another, no one of which is dominant. And from my point of view, I would say, “Ah, yes, Schank is moving in the direction of logic.” But how that’ll come out, I don’t know.

- OMNI

These are all approaches to the same problem — how to represent knowledge?

- McCarthy

Well, yes. Minsky and I have come to agree that the key thing is commonsense knowledge.

- OMNI

Give me a definition of commonsense knowledge.

- McCarthy

Well, compared to scientific knowledge, one might define it as events taking place in time and space, and knowledge about knowledge — things like that. If I ask you, “Is Andropov standing or sitting at this moment?” you will say, “I don’t know.” And if I say, “Think harder,” you’ll say, “That won’t help.” The question is, how do you know it won’t help to think harder? And if I ask you, “Does Andropov know whether you are standing or sitting?” the answer certainly can’t be determined by inspecting any model of Andropov’s mind.

- OMNI

I’m trying to get a sense of the difference between a logical approach and this frame approach to representing knowledge.

- McCarthy

From a logical point of view, the ideal — and I’m not a purist — would be that general, commonsense knowledge can be represented by a collection of sentences in a logical language, and that your goals can also be represented by such a collection of sentences.

If x is a bird, and birds can fly, then x can fly — that’s one argument. I’ve been using that sentence because Minsky gave it as an example of how little logic is good for. His argument had to do with the fact that there are many exceptions. A penguin, an ostrich, or a dead bird can’t necessarily fly. But maybe in a sufficiently dense atmosphere and at sufficiently low gravity an ostrich could fly. So the exception has the potential of being true.

- OMNI

With your nonmonotonic logic you can get around all the qualifiers by adding a phrase that says, “If nothing prevents it.”

- McCarthy

Basically, yes.

- OMNI

And then to check that, you have to go elsewhere, to other sentences. What prevents birds from flying?

- McCarthy

Right. And in particular, what prevents this specific bird from flying? What, if anything? And you have to do what’s called nonmonotonic reasoning. Namely, you have to assume that this particular bird can fly, unless you know something about this bird that prevents it from flying. And the reason it’s nonmonotonic — are you familiar with the mathematical notion of monotonic function? Ordinary logic is monotonic in the conclusions that you derive from assumptions. In other words, if you add more assumptions, then the conclusion that you could previously derive can still be derived, possibly along with other conclusions. Ordinary reasoning has nonmonotonic aspects. If I tell you that Tweetie is a bird, you will infer that Tweetie can fly. But if I added the fact that Tweetie is an ostrich, you would no longer make that inference. So this requires some modification of the reasoning structure of ordinary logic in order to get this nonmonotonic character. But those of us who like logic think we can modify logic to accommodate the problems of the real world. That something of the kind was required has been known for a long time. Ideas on how to do it formally and still preserve the formal character of logic were first being developed from the middle to late Seventies.
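
The Tweetie example can be sketched in a few lines of Python. This is only a toy illustration of a default rule with exceptions, not the formal nonmonotonic logic McCarthy refers to; the names `EXCEPTIONS` and `can_fly` are hypothetical and do not come from the interview.

```python
# Toy sketch of default reasoning, assuming facts are plain string labels.
# Not a formal logic: it only shows a conclusion being withdrawn
# when an extra assumption is added.

# Hypothetical list of known facts that prevent a bird from flying.
EXCEPTIONS = {"ostrich", "penguin", "dead"}


def can_fly(facts):
    """Assume a bird can fly unless a known fact about it prevents flying."""
    if "bird" not in facts:
        return False
    return not (facts & EXCEPTIONS)


tweetie = {"bird"}
before = can_fly(tweetie)              # default conclusion: Tweetie can fly

tweetie_more = tweetie | {"ostrich"}   # add one more assumption
after = can_fly(tweetie_more)          # the earlier conclusion no longer holds
```

In a monotonic system, adding "ostrich" could only add conclusions. Here it removes one, which is the nonmonotonic behavior described above.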

- OMNI

Do you imagine some such logical system might operate within the brain?

- McCarthy

What operates in the brain, I think, has got to be different. But I don’t have a clear picture of it.

- OMNI

So the idea is that regardless of what actually functions inside the brain, these things can be done logically in computers? Do they have to be done logically?

- McCarthy

No, they don’t have to. You can design a computer program that will make logical mistakes.

- OMNI

Is an expert system a good example that demonstrates Minsky’s idea?

- McCarthy

Some of the expert systems work according to still a third ideological basis. It’s a belief that if you just pile things on top of one another, this knowledge can be directed — that no theory is required.

The expert systems lack common sense. The example I usually give is MYCIN, which is a Stanford system that gives advice on bacterial diseases. It has no concept of events occurring in time. It has no concept of “patient,” “doctor,” “hospital,” “life,” or “death.” It does have concepts of the names of diseases, names of symptoms, names of tests that may be performed, and so on. And it converses in a sort of English. But if you were to say to it, “I had a patient yesterday with these symptoms, and I took your advice and he died: What shall I do today?” it would just say “input ungrammatical.” It wouldn’t have understood about the patient dying. It doesn’t need that information for its purposes. But in spite of all that, it’s quite useful. To some extent it’s a kind of animated reference book.

- OMNI

That’s a very good term for it, because it eliminates the notion of common sense, which most people automatically assume is there when they see a machine making a diagnosis.

- McCarthy

MYCIN is a particularly limited system. The interesting thing, similar to what I was saying about robotics, is that people are discovering how to get around the unsolved problems and make systems that are useful, even though these systems can’t do some of the things that are ultimately fundamental to intelligence. I talked before about the usefulness of some of these very limited vision systems, and here we have MYCIN, which is useful, although very limited.

Still, I take a more basic research-oriented point of view. These people make their very ad hoc useful systems and that’s fine. But I think the fundamental advances in artificial intelligence will be made by people looking at the fundamental problems. Now, for some reason, artificial intelligence is the subject of a great deal of impatience. When it had existed only for five years people were saying, “Yeah, yeah, you’ve been unsuccessful.” But when we compare it to, say, genetics, in which just about one hundred years passed from the time of Mendel to the cracking of the genetic code . . . Now, there may have been periods when people thought they would be able to create life in a test tube by 1910 or something like that, but we don’t remember that today.

- OMNI

Why do you suppose there is this unwarranted excitement and anticipation?

- McCarthy

Well, there’s always been unwarranted anticipation in science on the part of some people. I think some of the expressions of disappointment are disingenuous — people taking the fact that it hasn’t succeeded so far as evidence that it won’t succeed at all. On the other hand, there has been some overoptimism within the field. Partly that’s because if you see only certain problems, you can imagine a plan for overcoming those problems. But if there are more problems that you haven’t seen, you will be disappointed.

- OMNI

Decades ago, long before the enactment of the Privacy Act of 1974, you advocated a bill of rights, published in the September 1966 issue of Scientific American, to protect citizens from the abuse of information collection made possible by computers. You advocated national data banks as very important social tools, but wanted to assure that their contents would not be misused.

- McCarthy

I made a proposal for dealing with the misuse of information: that a person had a right to know what information about him was in the data banks; that he could sue for invasion of privacy; that he could challenge information in the file, and so on. I don’t know whether my article had anything to do with it, but in many places these ideas have been incorporated into laws and the thing has been elaborated upon considerably. Now I’m beginning to think my 1966 proposals were a mistake.

- OMNI

Why?

- McCarthy

To some extent they pandered to superstition: the superstition that people can and will harm you on the basis of trivial information. For example, Princeton University is worrying about whether my privacy would be violated if they release a photograph of me to Psychology Today. It’s a little bit like some primitive superstition that if you have a person’s nail clippings and a few locks of hair you can cast a spell on him, or that if you know someone’s true name you can harm him.

- OMNI

Don’t you think there is some value to personal privacy for its own sake?

- McCarthy

Yes, I suppose so. But it has been taken to extremes in Europe, especially Sweden. They have all these flaps about transnational flows of data. It’s nonsense. I’d rather build a legal fence around actions than around information.

- OMNI

What do you mean by that?

- McCarthy

Certain actions might be illegal, like discriminatory denial of credit or something. But from all this caginess it has resulted now that people think they have a right to see their letters of recommendation and so forth.

- OMNI

I hadn’t heard of that.

- McCarthy

Oh, there’s a big flap in the universities. But they’ve reached a reasonable compromise here at Stanford: A student can waive his right to inspect his recommendation. Of course nobody’s going to believe a recommendation unless that right has been waived. In other words, if I write a recommendation for somebody and it says on the form that he has the right to look at it, the person to whom I’m writing is going to say, “Well, this isn’t worth anything, because if McCarthy knows any adverse information he won’t mention it.”

And then you get busybodies getting involved in inspecting databases to be sure they don’t contain any information that shouldn’t be there. Stanford has this rule that any questionnaire must be cleared with the Committee on Experiments with Human Subjects unless it’s specially exempt. I’m supposed to get approval on all questionnaires because, who knows, one of my questions might offend somebody. So I’ve told the committee I am going to send out a questionnaire and not tell them about it. I haven’t got around to it yet — I haven’t figured out what I want to do a questionnaire on. But somebody has to defy them.

- OMNI

Well, there’s a point in there somewhere, isn’t there? Your brother Patrick was thrown out of the army for admitting to being a Communist, and then later in the Seventies he was dismissed from a post office job for refusing to sign a loyalty oath. I would think that your family’s history and experience would lead you to fear the misuse of data banks and information.

- McCarthy

But I think that the legitimate protection against misuse of information is at the level of action. In other words, the post office shouldn’t have been allowed to fire my brother.

- OMNI

But they still should be allowed to have access to various kinds of information about people?

- McCarthy

What goes into data banks should be a matter of judgment, but I’ve become convinced that there should be no restrictions on the storage and exchange of information.

- OMNI

Between, say, the FBI and the IRS?

- McCarthy

Between anybody. Even private individuals should be allowed to keep records. If you want to be sure nobody has files that he shouldn’t, then you have to be able to snoop in his files.

- OMNI

So private individuals ought to be able to go to the IRS and take a look at their files?

- McCarthy

No. Not exactly. Take another view of it: There is privacy, and there is privacy. In order to be sure that you aren’t violating my privacy, I have to violate yours. How do I know what information about me you have stored in the data bank of The Washington Post, unless I get the chance to inspect the data bank, in which case I’ll find out all sorts of things about you and The Washington Post?

- OMNI

And you ought to have that right?

- McCarthy

No. In order to cut off these reverberating violations of privacy, rules should be enforced at the level of action and not at the level of information storage.

- OMNI

So, for example, the FBI may have a long file on you, but unless they use it to harass, arrest, or convict you, then nothing happens?

- McCarthy

That’s my current view of it. The cost of looking at information and deciding what is valid and so forth is enormous. Normally it’s done only when something is really important. In other words, if you take the Tylenol poisonings, all sorts of random company records that normally are not looked at are going to be scrutinized extremely carefully for whatever clues they might provide — at an enormous cost. If you wanted to examine police files to find out whether they contained information that violated somebody’s privacy, it would be almost as expensive. So what you get is something more informal.

I assume that it’s standard procedure for policemen to call each other up and say, “Well, we didn’t dare put the information in the files, but while we had this guy in jail he was talking to this other fellow who was involved in drug smuggling.”

- OMNI

I covered the Tylenol story and that is exactly what happened, because at one time the police had to rid their files of certain information. So what they did instead was to get the older members of the investigative force together and say, “Let’s go back and try to remember these files we had to get rid of.” And they did; they remembered the guys, went out, rounded them up, and questioned them.

- McCarthy

They might have forgotten some very important things. Or they might have gotten them wrong and some poor fellow whose actual offense was entirely unrelated was confused with somebody else.

Let me tell you about a calculation I’ve made. I got interested in the question of how much a safety measure can cost before you actually lose lives by spending money on it. The estimate is made the following way: Take the statistical abstract of the United States and the annual death rate by states and the income by states, and draw a regression line through that. You’ll get the result that if you spend more than 2.3 million dollars for every life saved, you are in fact losing lives. Because if that same money were randomly sprinkled through the economy by reducing taxes or something, people who received that money would, on the average, take better care of themselves. Their lives would be happier. But if a state spends more than 2.3 million dollars per life saved through a safety measure, then that state is reducing the disposable income of its citizens. In the long run it is actually killing more people than it saves.

Now take the Tylenol thing. Johnson & Johnson has already spent more than 100 million dollars withdrawing Tylenol from the market. Okay. According to my calculations they must save forty lives by doing so before they reach the break-even point. And the new packaging’s going to cost 2.4 cents a bottle. Now if you newsmen had just shut up, then we wouldn’t have had these imitation crimes, at least, and one would have been able to say, “The poisoning of drugs is a rare event and it’s not likely to occur any more frequently in the future than it has in the past. We will save more lives by not spending the money on safety caps.”
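
The break-even figure can be checked with simple arithmetic. The sketch below uses only the two dollar amounts quoted in the interview; the variable names are hypothetical, and the recall cost is taken as exactly 100 million dollars although the text says "more than."

```python
# Back-of-the-envelope check of the break-even claim.
# Both figures are the ones quoted in the interview; nothing else is assumed.

BREAK_EVEN_PER_LIFE = 2.3e6   # dollars per life saved, from the regression estimate
RECALL_COST = 100e6           # dollars spent withdrawing Tylenol (lower bound)

# Lives the recall must save before the spending stops costing lives on net.
lives_needed = RECALL_COST / BREAK_EVEN_PER_LIFE

print(round(lives_needed, 1))  # 43.5
```

The result, roughly 43 lives, is consistent with the round figure of forty given above.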

- OMNI

That’s assuming you don’t report the original crime?

- McCarthy

That’s right. Or you minimize publicity on the original crime. Merely reporting it probably has less effect than pounding away at it in the newspapers for days and days. Because psychotics probably are not regular newspaper readers, and furthermore, because of their concentration on their problems, the notion has to be pounded into them a bit more before it occurs to them to go and do likewise.

- OMNI

That theory would be supported by the time gap between the reporting of the original crime and the beginning of the wave of other poisonings.

- McCarthy

Now here was a latent disaster, or at least latent harm, that’s been sitting around for fifty years or more. Presumably the psychotics who might be inclined to do that sort of thing have existed for a long time, but nothing triggered them. I once thought about what would happen to our society if there really were a lot of poisoners — people trying to poison water supplies. Society would manage to survive, but we might spend ten percent of our GNP on security measures.

[end]


This article copyright © 1983 by Philip J. Hilts. Used by permission. All rights reserved.
